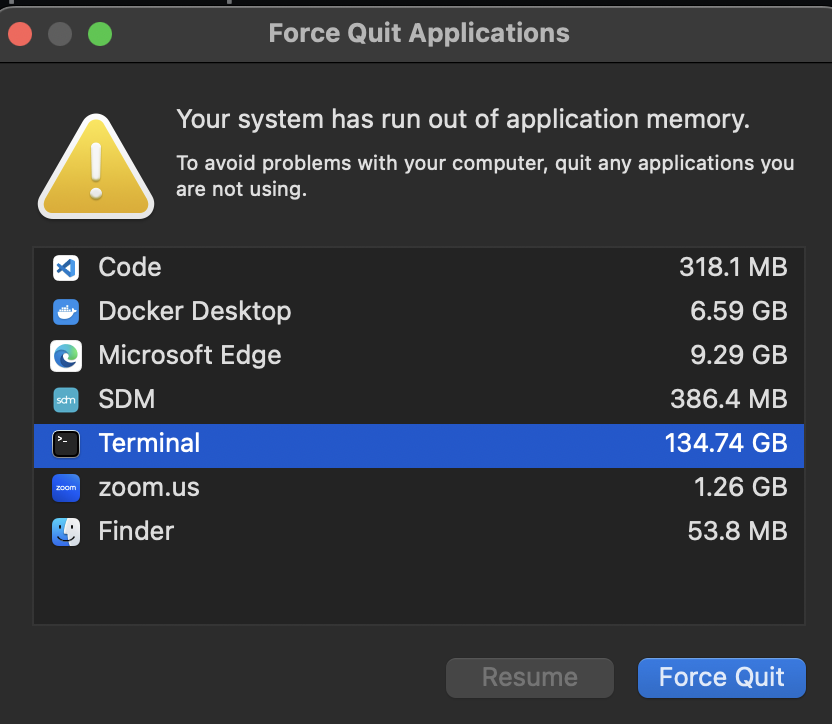

I use Microsoft Edge and need >134GBs of RAM for my terminal (yeah I vibe code)

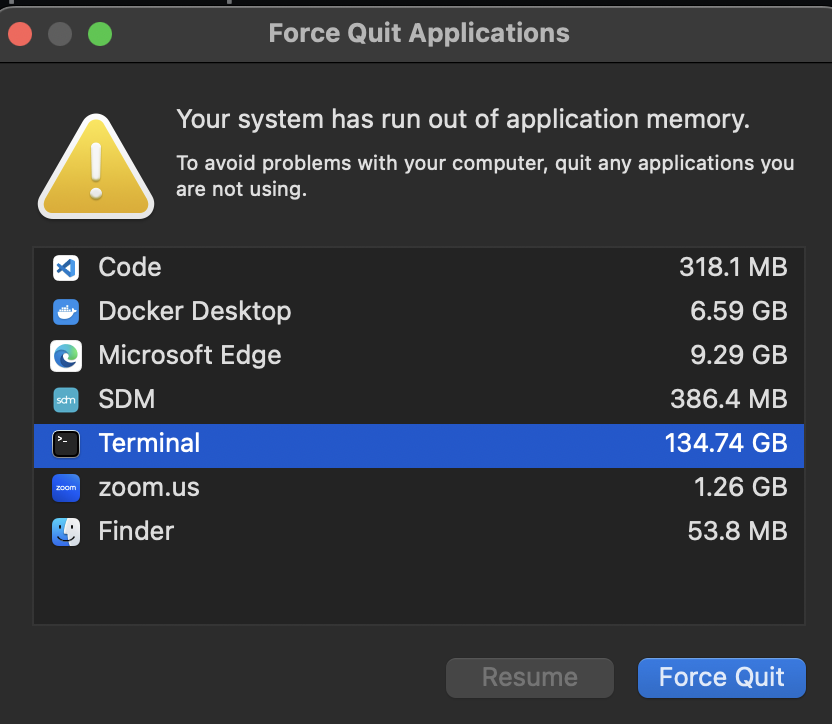

OpenAI’s codex has demonstrated something I’ve struggled to demonstrate myself for a while: we’ve been doing AI software engineering wrong. Or at least, there’s a better way, with CLIs and files as the interface. What’s old is new again.

In this post, I demonstrate the core workflow for modern agentic software engineering that can be applied to pair-programming or automation via CI/CD:

- `task.md` file with all relevant out-of-repo context
- `ai "open the task.md and work on it"`
- `gh issue view NUMBER > task.md` and such…
- `Makefile` or similar (I like `justfile`) and instructions for your AI on using them

You can then leverage standard software development lifecycle (SDLC) best practices and GitOps for modern agentic software engineering.
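To make the file-as-interface idea concrete, here is a minimal sketch of the first steps of that workflow in Python. It assumes the GitHub CLI (`gh`) is installed and authenticated; the helper names (`fetch_issue`, `build_task`, `write_task`, `agent_command`) and the `task.md` layout are my own illustrative choices, and `ai` stands in for whatever agent CLI you use.

```python
import subprocess
from pathlib import Path


def fetch_issue(number: int) -> str:
    """Return the issue text, roughly what `gh issue view NUMBER` prints."""
    result = subprocess.run(
        ["gh", "issue", "view", str(number)],
        capture_output=True,
        text=True,
        check=True,  # raise if gh fails (not installed, not logged in, bad number)
    )
    return result.stdout


def build_task(issue_text: str, notes: str = "") -> str:
    """Combine the issue text and optional out-of-repo notes into task.md content."""
    sections = ["# Task", "", issue_text.strip()]
    if notes.strip():
        sections += ["", "## Extra context", "", notes.strip()]
    return "\n".join(sections) + "\n"


def write_task(content: str, path: Path = Path("task.md")) -> Path:
    """Write the task file the agent will be pointed at."""
    path.write_text(content, encoding="utf-8")
    return path


def agent_command(task_path: Path) -> list[str]:
    """Build the agent invocation; `ai` is a placeholder CLI name (assumption)."""
    return ["ai", f"open the {task_path.name} and work on it"]
```

A typical run would be `write_task(build_task(fetch_issue(NUMBER), notes="..."))` followed by `subprocess.run(agent_command(Path("task.md")))`. Keeping the file-building step separate from the `gh` and agent calls means the same function works whether you are pairing locally or running in CI.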

I would like to abolish the term RAG and instead just agree that we should always try to provide models with the appropriate context to provide high quality answers.

This is important. Obfuscating how to use the language boxes with dumb jargon isn’t helpful.

- Cody

Looking at LLMs as chatbots is the same as looking at early computers as calculators. We’re seeing an emergence of a whole new computing paradigm, and it is very early.

- Cody

The bane of my existence:

InvalidRequestError: This model’s maximum context length is 32768 tokens. However, you requested 33013 tokens (31413 in the messages, 1600 in the completion). Please reduce the length of the messages or completion.

Somebody please give me GPT5-64k access.
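The arithmetic behind that error is easy to check before sending a request. A minimal sketch, assuming you already have token counts from whatever tokenizer matches your model; the function names here are hypothetical, not part of any API:

```python
def fits(context_limit: int, message_tokens: int, completion_tokens: int) -> bool:
    """True if the messages plus the requested completion fit in the context window."""
    return message_tokens + completion_tokens <= context_limit


def completion_budget(context_limit: int, message_tokens: int) -> int:
    """Tokens left for the completion; a negative value means the messages alone overflow."""
    return context_limit - message_tokens


# Numbers taken from the error message above.
print(fits(32768, 31413, 1600))          # False: 31413 + 1600 = 33013 > 32768
print(completion_budget(32768, 31413))   # 1355
```

So the request above would have gone through with a completion of at most 1355 tokens, or with about 245 fewer tokens in the messages.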

See part 1.

The bane of my existence:

InvalidRequestError: This model’s maximum context length is 16385 tokens. However, your messages resulted in 28737 tokens. Please reduce the length of the messages.

Somebody please give me GPT4-32k access.

Updates:

See part 2.